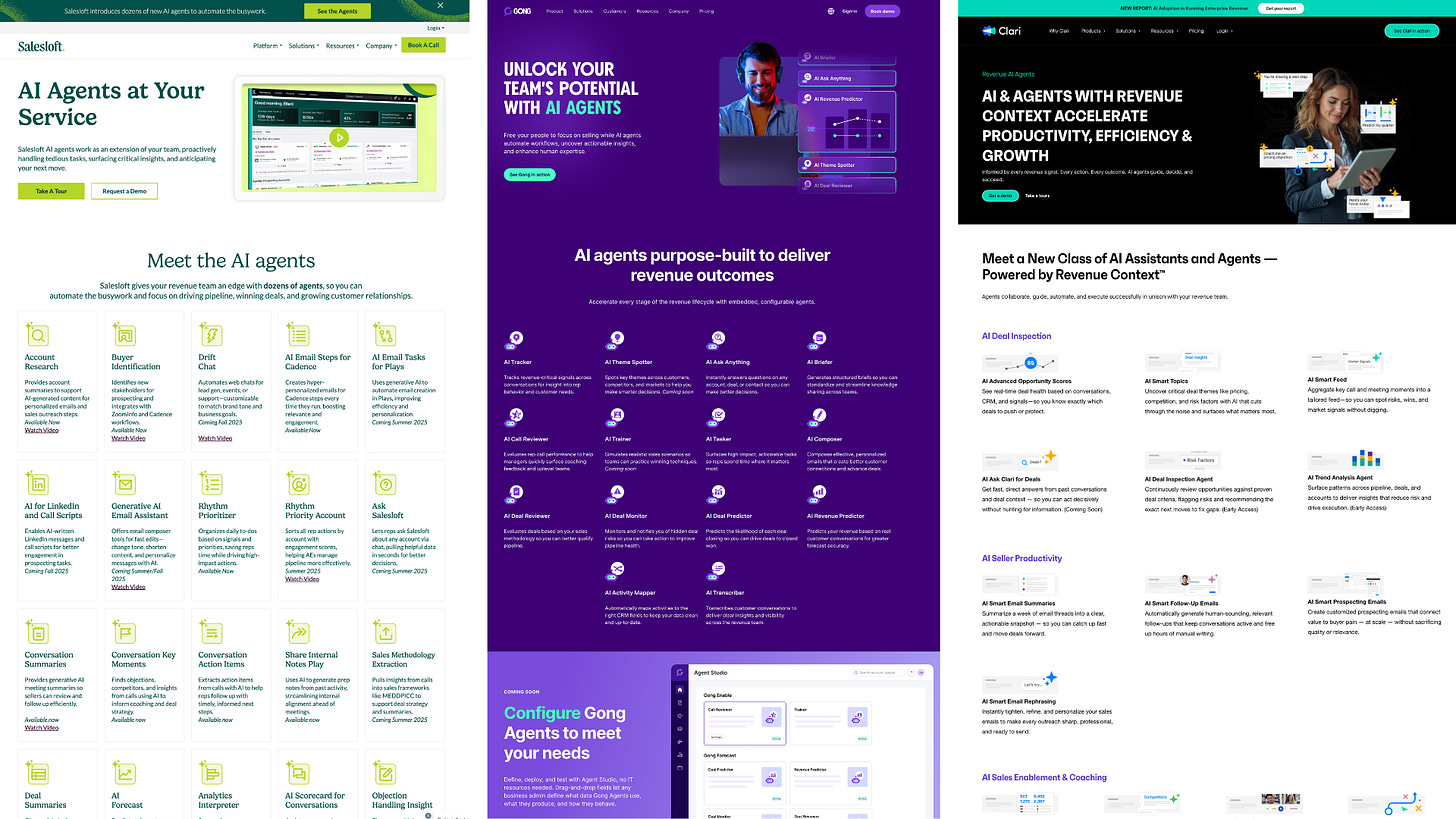

Hey, have you heard about AI agents? All your favorite sales tech vendors sure have. I mean, just look at this:

This is just the start—it doesn’t even include Marc Benioff’s quest to become the world’s “largest supplier of digital labor”.

We’re not immune to it at Gradient Works, either. Our AI Account Researcher is, in fact, an agent.

It’s not just GTM tech. Google’s gaga for agents. Microsoft has agent mania.

Over on LinkedIn you’ll find people managing “swarms” of agents, putting them in org charts or proudly sharing how they’re saving hundreds of hours each month (supposedly).

There are lots of think pieces about how agents will change sales and lots of hyper-specific technical advice for building agents. However, there’s very little to help a sales leader get a handle on how to think about agents without getting too deep in the weeds.

This article aims to change that. My goal is an Agent 101 for Sales Leaders: just enough detail to evaluate vendor claims, get an idea of what’s possible, and deal with the messy reality of AI agents in 2025.

What is an agent?

Like a lot of AI terms, “agent” seems to mean different things to different people. So what actually is an agent?

Believe it or not, the AI computer science course I took back in 2002 was all about agents. Chapter 2 of the textbook started with this sentence: “An agent is anything that can be viewed as perceiving its environment through sensors and acting upon that environment through effectors.” The authors describe the goal of an agent as simply “do a good job of acting on their environment.”

Meanwhile in the non-academic world, every vendor and LinkedIn poster calls anything with AI an “agent” because it sounds cool. To help cut through the noise, here’s a useful way to think about what people might mean when they say “agent”:

Assistants respond to prompts with information

Workflows perform predefined steps with some AI help

Agents use AI to determine steps to take and tools to use to achieve a goal

The above is loosely based on Anthropic’s taxonomy of “agentic systems”. That document is way more technical than we need, so I’ve simplified it a bit here.

Let’s look at each of these “agentic” categories in detail.

Assistants

An assistant takes a simple prompt and provides a coherent response. This is the way most of us use ChatGPT, Claude, Gemini, etc. today. We ask a question (“Who wrote the Declaration of Independence?”) or request a certain behavior (“Draft a polite email to a prospect named Bob who just told me he needs a 50% discount to sign today”) and they do it.

Assistants might maintain some information to help tailor their responses and may be able to retrieve certain resources to help with responding to prompts. They do not, however, go and do things on the user’s behalf.

If you see anyone call their custom GPT an agent, they’re really talking about an assistant.

Workflows

Workflows are what they sound like: multi-step automated processes. The difference between OG workflows (like you might set up for approvals in Salesforce) and ones that purport to be “agents” is that new workflows may use AI in one or more of their predefined steps.

For example, the “agent” I shared in my AI Beyond the Prompt post was really just a workflow. It gets triggered when an email hits an inbox, it runs some steps that are a mix of pre-defined logic plus AI, and—voila—it sends an email back with some “research”.

A workflow is a bit like a task you’ve laid out for a low-level employee. There might be a couple of steps where they need to stop and make a choice, but for the most part they’re just following a set of pre-defined paths.

One thing that sets workflows apart from assistants is that workflows are empowered to do things like update a database or perform an action in an application. However, the set of possible things they can do is limited to a predefined set of actions.

If you see someone on LinkedIn talking about how they “hired” 30 AI agents, this is usually what they’re talking about. Or if you happen to see a $250B company building an entire workforce of agents, this might also be what they’re talking about.
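To make the distinction concrete, here’s a minimal Python sketch of a workflow along the lines of the email research example above. The steps and their order are fixed in code; AI fills in just one of them. The `Email` type, the `summarize_with_ai` placeholder and the sender check are all hypothetical illustrations, not how that workflow (or any product) is actually built.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Email:
    sender: str
    to: str
    subject: str
    body: str


def summarize_with_ai(text: str) -> str:
    # Hypothetical placeholder for the one AI step. A real workflow would
    # send `text` to an AI model and return the model's answer.
    return f"Research summary for: {text[:40]}"


def research_workflow(incoming: Email, company_domain: str) -> Optional[Email]:
    # Step 1 (predefined logic): only handle requests from our own team.
    if not incoming.sender.endswith("@" + company_domain):
        return None
    # Step 2 (AI step): the model writes the "research".
    summary = summarize_with_ai(incoming.body)
    # Step 3 (predefined action): reply to whoever sent the request.
    return Email(
        sender="research@" + company_domain,
        to=incoming.sender,
        subject="Re: " + incoming.subject,
        body=summary,
    )


request = Email("rep@example.com", "research@example.com", "Acme Corp", "Please research Acme Corp")
reply = research_workflow(request, "example.com")
```

Every run follows the same path. The AI can make the research better or worse, but it can’t decide to skip a step or do something else entirely.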

Agents

Ok, so now what’s an actual agent? I like how Kristina McMillan, EIR for GTM AI at Scale, defines them:

AI agents are goal-oriented systems that observe, decide, and act within a defined environment.

True agents are like moderately experienced employees. Instead of laying out a set of steps to take, you give them a goal, some guardrails and the tools they need to achieve that goal. It’s up to the employee to figure out how to achieve the goal using the available tools without going outside the guardrails.

AI SDRs are (theoretically) examples of this kind of agent. You give them a goal like “work these accounts and set as many demos as you can”. The tools might be access to an email account and CRM. The agent then gets to decide the steps it takes and how it uses the tools at its disposal to try to be successful.

Like workflows, agents do things. However, the scope of what they can do is much broader. They have longer “memories” (e.g. what they said to a prospect last week), can pause between tasks (e.g. waiting for a response from a prospect), and may even choose to loop in a human at certain steps—all in the service of achieving their goal. Most importantly, they have the latitude to decide what to do to help them achieve their goal.

Right now, very few AI tools branded as “agents” actually meet this threshold. Most of the “agents” in the screenshots above are somewhere between assistants and workflows.
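What separates a true agent is who decides the next step. Here’s a rough Python sketch of that idea: a loop asks a decision function what to do given the goal and what has happened so far, runs the chosen tool, and repeats until the function says it’s done. `fake_model_decide` is a scripted, hypothetical stand-in for the AI model; in a real agent, that choice is exactly what the model supplies. The two guardrails shown here, a step limit and a fixed set of tools, are deliberately simple, and the tool names are made up.

```python
from typing import Callable

# Each tool takes the goal as text and returns a text result.
Tool = Callable[[str], str]
# The agent's "memory": (tool name, result) pairs, in order.
History = list[tuple[str, str]]


def fake_model_decide(goal: str, history: History) -> str:
    # Hypothetical, scripted stand-in for the AI model. A real agent would
    # show the model the goal, the history so far and the tool menu, and
    # let it choose the next step.
    used = [name for name, _ in history]
    if "lookup_account" not in used:
        return "lookup_account"
    if "send_email" not in used:
        return "send_email"
    return "done"


def run_agent(goal: str, tools: dict[str, Tool],
              decide: Callable[[str, History], str], max_steps: int = 5) -> History:
    history: History = []
    for _ in range(max_steps):  # guardrail 1: a hard cap on steps
        choice = decide(goal, history)
        if choice == "done":
            break
        if choice not in tools:  # guardrail 2: only tools it was given
            history.append((choice, "refused: tool not available"))
            continue
        history.append((choice, tools[choice](goal)))
    return history


# Hypothetical tools that return canned text instead of touching real systems.
tools = {
    "lookup_account": lambda goal: "Acme Corp: found 3 contacts",
    "send_email": lambda goal: "Emailed a contact asking for a demo",
}
history = run_agent("Set a demo with Acme Corp", tools, fake_model_decide)
```

Swap in a decision function that asks for a tool the agent wasn’t given, and the loop records a refusal instead of doing anything.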

Ok, so now that we’ve got some different categories, how do we actually get some of this sweet, sweet agentic behavior for our teams?

Platforms and agents and apps, oh my

Clari, Gong, etc. aim to be GTM platforms. Over the years they’ve built new capabilities and integrated tons of acquired functionality to get there. When it comes to agents, they’re pitching you on using the agents they’ve built inside their platforms. These agents are additional platform features—integrated, pretty easy to use and designed to keep you using all their other capabilities.

Other systems, like Zapier and n8n, are agent platforms. They give you the capability to build agentic systems for your specific use case. That means the ability to customize logic and integrate with other systems. They’re super modular and they don’t particularly care if you’re building GTM agents or some other workflow entirely. While they don’t require code, using them is a lot closer to programming than it is to just pushing a button.

Salesforce is some of both. It’s a GTM platform with an agent platform built inside it.

So what about all your other apps and systems? They’ll probably offer some agent-like functionality but they’ll also want to be part of the workflow for any future agentic systems. That means they’ll need to be usable by AI. And that means they need to become tools.

Tools and integrations

Tools let agentic systems take actions like looking up data or changing something. The systems, services and applications your team uses might make certain tools available to an AI model to do things.

Think of these tools as buttons or menu items made available by some application for AI to discover and use¹. Without these tools, agents couldn’t really do anything useful. With these tools, you can have them do all sorts of things on your behalf.

Here’s a more concrete example. Slack could provide a “tool” that allows an AI model to send a message. Imagine an agent has been told to “send a Slack message letting the rep know you’re done”. Once the agent has completed its main task, it might compose a message saying “@rep I’m done!”, look through its menu of tools, find one called “send_slack_message”, and use that to send the message it wrote.

This ability is incredibly powerful. In a non-AI world, a developer would have had to carefully code that kind of behavior. They would have needed a way to recognize that the user wants to send a Slack message and whom they want to mention, and then carefully craft an API call to Slack to make sure the message is sent properly. In an AI world, the AI model can “just figure it out” and do the right thing using the available tools.
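Here’s roughly what that menu of tools looks like from the agent’s side, as a small Python sketch. Each tool pairs a name and a plain-language description (which the model reads) with the code that actually does the work. `ToolSpec`, `use_tool` and both tools are hypothetical; the Slack tool records the message locally instead of calling Slack’s API, and the CRM tool returns canned text.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ToolSpec:
    name: str              # what the model looks for on the menu
    description: str       # plain-language hint the model reads
    run: Callable[..., str]


sent_messages: list[str] = []


def send_slack_message(channel: str, text: str) -> str:
    # Hypothetical tool: a real one would call Slack's API. Here it just
    # records the message so the example runs offline.
    sent_messages.append(f"{channel}: {text}")
    return "ok"


TOOL_MENU = [
    ToolSpec("send_slack_message", "Send a message to a Slack channel", send_slack_message),
    ToolSpec("lookup_account", "Look up an account in the CRM",
             lambda account: f"{account}: 3 open opportunities"),
]


def use_tool(menu: list[ToolSpec], tool_name: str, **arguments: str) -> str:
    # Scan the menu for a tool with a matching name...
    for tool in menu:
        if tool.name == tool_name:
            # ...and call it with the arguments the model composed.
            return tool.run(**arguments)
    raise LookupError(f"No tool named {tool_name!r}")


# In a real agent, the model would pick the tool and write the message itself.
result = use_tool(TOOL_MENU, "send_slack_message", channel="#deals", text="@rep I'm done!")
```

The developer’s job shifts from coding each behavior to describing each tool clearly enough that a model can pick the right one.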

You might ask how these tools become “available” to the AI. The answer is still very much evolving.

Until recently, integrating a particular tool with AI required a custom process. Platforms like Zapier and n8n have built hundreds of customized integrations to support these kinds of connections. OpenAI has some built-in connections to things like Google Drive and OneDrive. All of those are proprietary and custom.

That’s started to change with a technology called MCP². It was developed by Anthropic and announced in late 2024 but is now being adopted by all the major AI vendors. Here’s the official description:

Think of MCP like a USB-C port for AI applications. Just as USB-C provides a standardized way to connect your devices to various peripherals and accessories, MCP provides a standardized way to connect AI models to different data sources and tools.

The idea here is that as long as applications provide their tools in a standardized way, AI models can use them. This isn’t just startups hacking stuff together—HubSpot recently launched beta support for MCP so AI can directly interact with HubSpot to do things like query records and update data.
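For the curious, here’s a rough Python sketch of what “standardized” means in practice. MCP messages use JSON-RPC 2.0: a server describes its tools (a name, a description and a JSON Schema for the inputs) in response to a `tools/list` request, and an AI application invokes one with a `tools/call` request. The method and field names follow the MCP specification; the Slack tool and both helper functions are hypothetical, and a real integration would use an MCP SDK rather than assembling JSON by hand.

```python
import json

# What an MCP server might return for a "tools/list" request.
# The tool is hypothetical; the field names follow the MCP spec.
tools_list_response = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "send_slack_message",
                "description": "Send a message to a Slack channel",
                "inputSchema": {
                    "type": "object",
                    "properties": {
                        "channel": {"type": "string"},
                        "text": {"type": "string"},
                    },
                    "required": ["channel", "text"],
                },
            }
        ]
    },
}


def missing_arguments(tool: dict, arguments: dict) -> list[str]:
    # A minimal check against the advertised schema's "required" list
    # (not full JSON Schema validation).
    return [key for key in tool["inputSchema"].get("required", []) if key not in arguments]


def build_tool_call(request_id: int, name: str, arguments: dict) -> str:
    # The AI application asks the server to run a tool by name.
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    })


tool = tools_list_response["result"]["tools"][0]
args = {"channel": "#deals", "text": "@rep I'm done!"}
request_json = build_tool_call(2, tool["name"], args)
```

Because every MCP server describes its tools in this same shape, any MCP-capable AI application can discover and call them without a custom integration for each one.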

It seems plausible that MCP will gain wide adoption with many of the applications in your GTM stack supporting it. That will make building powerful agents much easier.

One thing you can do today is ask your vendors if they have an MCP server. Right now the answer is probably “no”. That’s likely to change pretty quickly.

This all sounds great. So what’s the catch? The catch is the real world.

Agent meets world

You know how MCP described itself as a USB-C port for AI applications? Sounds great, right? By the way, how much does your infosec team like it if you stick random USB devices into your work laptop?

Your GTM systems contain lots of sensitive data—personally identifiable information, financial data, trade secrets. There are plenty of legal and fiduciary reasons to protect that stuff.

These systems also contain sensitive capabilities. You wouldn’t really want a rogue AI SDR to start spamming executives at your million-dollar customers or to cancel a contract in your ERP system.

You shouldn’t be surprised if your CIO/CISO is wary of potential problems. These problems aren’t just risk-averse security folks being a stick in the mud—they’re real.

Historically, when two systems connected to each other, there was a clear technical “contract” in the form of an API that defined what each system could do.

AI doesn’t work like that.

Working with an AI agent is more like hiring a person. Once you hire them, you give them the least possible access³ to the systems they need to do their job and then let them take the actions they see fit. The difference with AI? You can’t do a background check on this “person”, you can’t get references, you can’t build trust over time, you can’t send them to jail if they do something illegal. You can fire them if they make a big mistake, but they won’t care.

And that’s if everything goes well and nobody’s actively trying to exploit all this immature technology to do anything bad.

No wonder security folks always seem a little tense.

If you’re looking to deploy agents, be prepared to work with your security and IT teams. Help them understand the business impact you think you can get from any new agent and work with them when they identify security issues. These issues will be easier to resolve for agents that run entirely inside platforms you already trust and more severe for agents that need to reach across systems.

You’ll need to find some compromises on both sides. If it becomes a fight, it’s going to be a long few years while the technology stabilizes and sorts itself out.
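For the technically minded, here’s what “least possible access” might look like in code: the agent gets a named account with an explicit allowlist, and anything not on the list is denied by default. This is a minimal Python sketch; `AgentAccount`, `authorize`, `PermissionDenied` and the permission names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class AgentAccount:
    # Like a new hire's account: a name plus an explicit list of permissions.
    name: str
    allowed_tools: frozenset[str] = field(default_factory=frozenset)


class PermissionDenied(Exception):
    pass


def authorize(agent: AgentAccount, tool_name: str) -> None:
    # Least privilege: deny anything that wasn't explicitly granted.
    if tool_name not in agent.allowed_tools:
        raise PermissionDenied(f"{agent.name} may not use {tool_name}")


ai_sdr = AgentAccount("ai-sdr", frozenset({"read_crm", "send_email"}))
authorize(ai_sdr, "send_email")         # allowed: part of the job
# authorize(ai_sdr, "cancel_contract")  # would raise PermissionDenied
```

In practice you’d want this enforced by the system being accessed (through scoped credentials or roles, say) rather than by a check the agent’s own code could skip. That’s exactly the kind of detail to work through with your security team.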

Wrapping up

As you work with your teams and stakeholders to introduce agents, make sure you understand what you actually need: an assistant, a workflow or a true agent.

Then work with ops to understand if those capabilities are something you can get from a platform you’ve already got or if you’ll need to orchestrate something new.

Finally, treat any agent that accesses your systems like a new hire. Make sure you know what tools that agent will need to do its job and work with your security and IT teams to make sure it can safely access them.

Agentic AI systems really will change sales—they’re a key part of achieving massive GTM efficiency gains. It’s going to be worth it in the long run, but recognize that today’s technology is extremely immature and has real risk. It’s going to be a bumpy ride for a while yet.

¹ Not literally. They’re actually more like API calls, but that’s the general idea.

² Model Context Protocol, but who wants to write that out when you can invent another acronym.

³ This is called the principle of least privilege. It’s why your sales reps aren’t Salesforce admins and your interns can’t run payroll.